Oracle SOA Suite 11g - Callback not reaching the calling service in a Clustered environment:

In Oracle SOA Suite 10g, we had a scenario like shown in the below diagram.

Process A

Process A calls Process B and waits for the call back; after receiving the call back; The Process A calls again the same Process B and waits for the second call back.

This flow is working fine in 10g but after migrating to 11g the second call back is not reaching the Process A.

In the SOA log file we could able to see the below error message -

an unhandled exception has been thrown in the Collaxa Cube systemr; exception reported is: "javax.persistence.PersistenceException: Exception [EclipseLink-4002] (Eclipse Persistence Services - 2.1.3.v20110304-r9073): org.eclipse.persistence.exceptions.DatabaseException

Internal Exception: java.sql.SQLIntegrityConstraintViolationException: ORA-00001: unique constraint (SOA_SOAINFRA.AT_PK) violated

Error Code: 1

Call: INSERT INTO AUDIT_TRAIL (CIKEY, COUNT_ID, NUM_OF_EVENTS, BLOCK_USIZE, CI_PARTITION_DATE, BLOCK_CSIZE, BLOCK, LOG) VALUES (?, ?, ?, ?, ?, ?, ?, ?)

bind => [851450, 5, 0, 118110, 2012-01-12 16:08:04.063, 5048, 3, [B@69157b01]

Query: InsertObjectQuery(com.collaxa.cube.persistence.dto.AuditTrail@494ca627)

at org.eclipse.persistence.internal.jpa.EntityManagerImpl.flush(EntityManagerImpl.java:744)

Internal Exception: java.sql.SQLIntegrityConstraintViolationException: ORA-00001: unique constraint (SOA_SOAINFRA.AT_PK) violated

Error Code: 1

Call: INSERT INTO AUDIT_TRAIL (CIKEY, COUNT_ID, NUM_OF_EVENTS, BLOCK_USIZE, CI_PARTITION_DATE, BLOCK_CSIZE, BLOCK, LOG) VALUES (?, ?, ?, ?, ?, ?, ?, ?)

bind => [851450, 5, 0, 118110, 2012-01-12 16:08:04.063, 5048, 3, [B@69157b01]

Query: InsertObjectQuery(com.collaxa.cube.persistence.dto.AuditTrail@494ca627)

at org.eclipse.persistence.internal.jpa.EntityManagerImpl.flush(EntityManagerImpl.java:744)

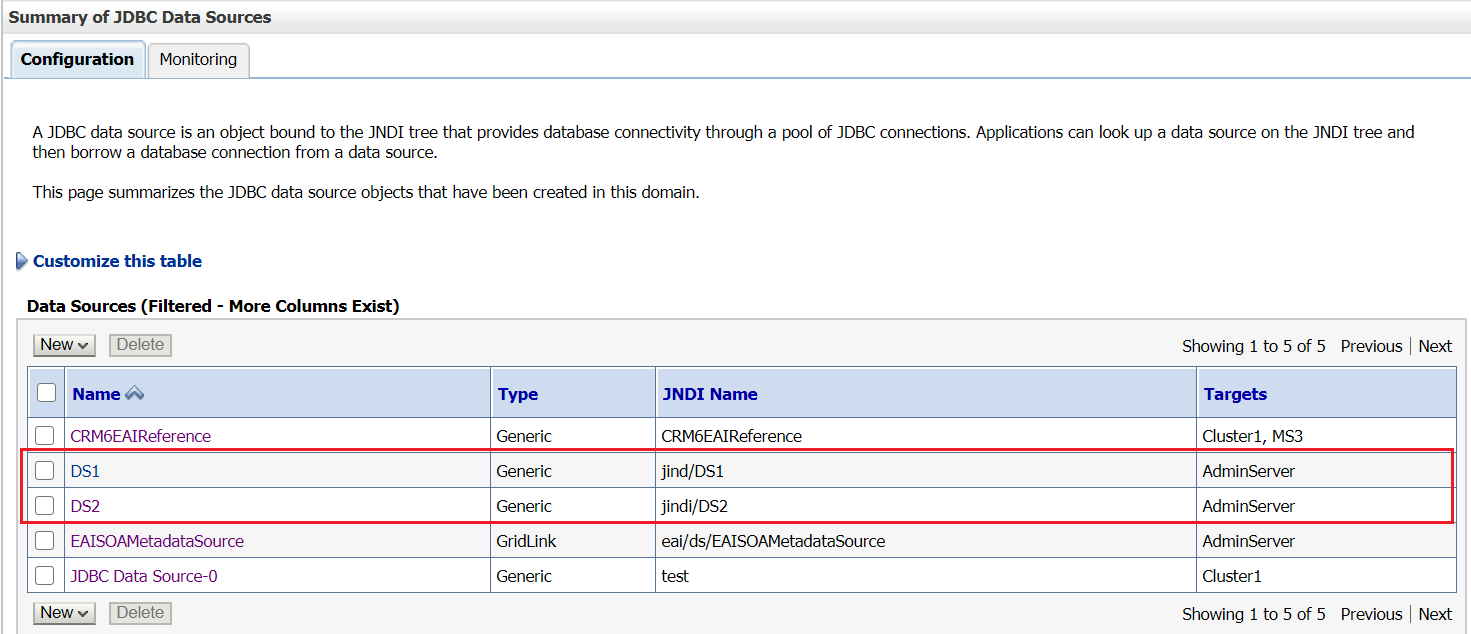

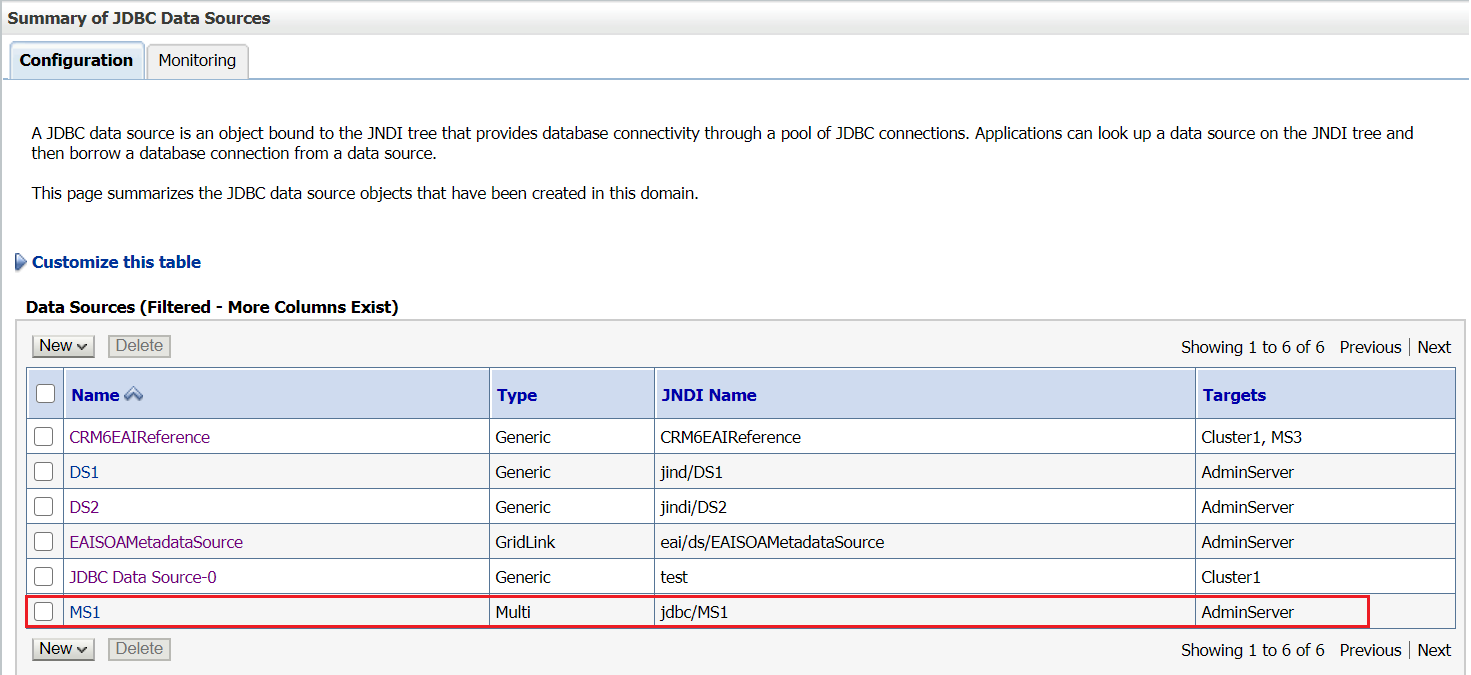

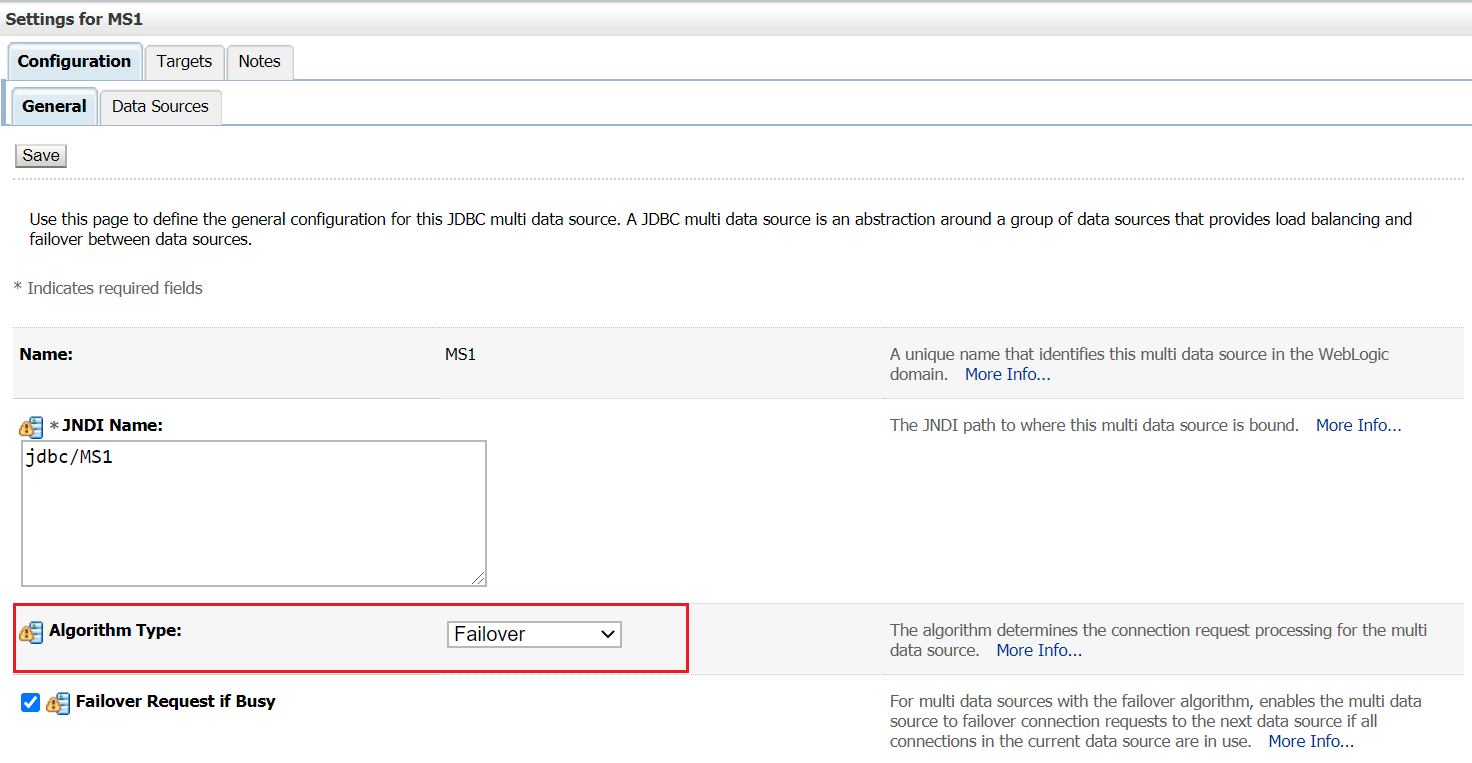

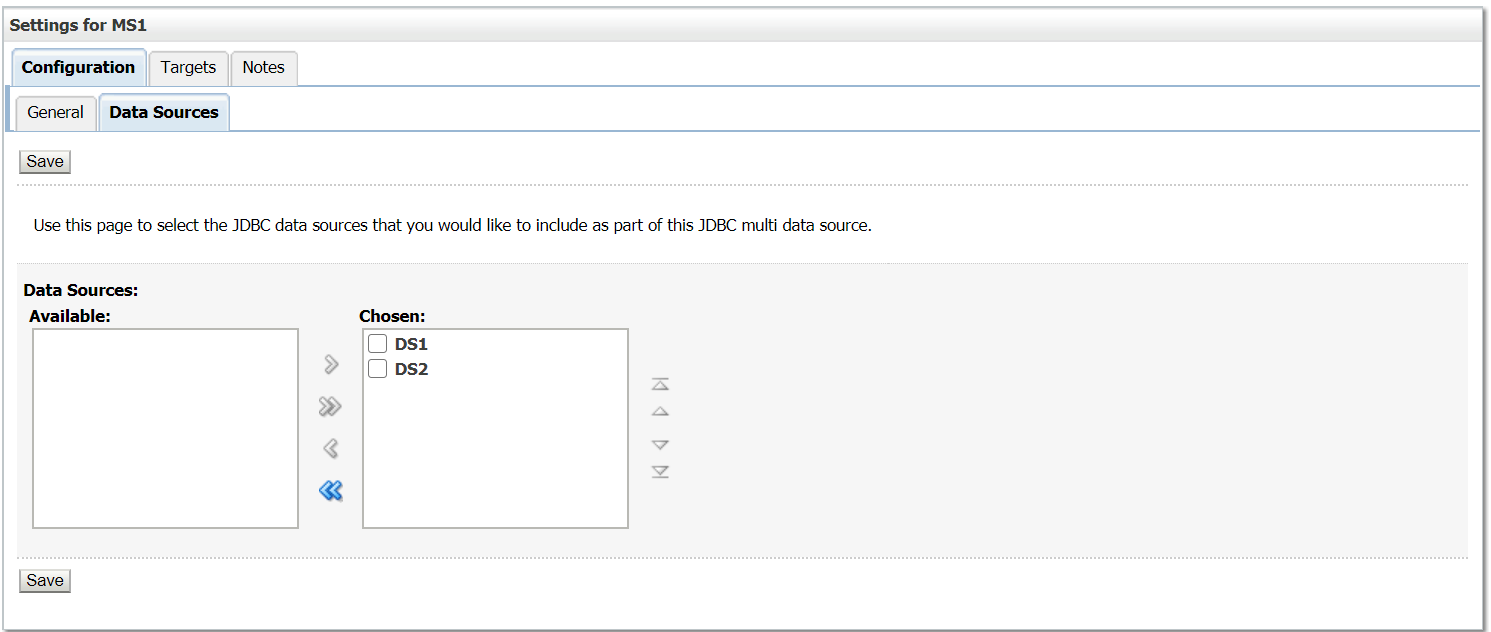

After the detail analysis and the Oracle SR, the root cause is “Audit Trail caching issue is causing the process not to get to complete due to the exception while doing the second call back”.

Let’s say in a Cluster with four nodes Process A first created on node 1 and carried on and called first call to Process B and dehydrates. Assume that second call hit a node other than node 1, let’s say node 4. Node 4 carries on doing the work and does the second call to Process B and dehydrates. If the second call back hits Node 1, rather than getting the latest audit trail from database, it’s using the cache and trying to update the audit trial with an id which node 4 already used. That’s throwing an exception and rolling back. For that reason the call back is not getting delivered.

As a temporary solution by switching of the audit trail for Process A, it doesn’t try to insert the data into audit trail and the transaction won’t get roll back and the call has reached successfully.

As a permanent solution, Oracle has provided the patch, the same can be download from the below path.

The issue identified in the SOA Suite version – 11.1.1.5.0