How to enable Basic Authentication for Non-Prod AEM websites? | Support Authenticated Performance Testing with Cloud Manager

Enable Basic Authentication:

A common requirement is to enable basic authentication on non-prod AEM websites so that unauthenticated users cannot access the content (IP whitelisting is another option) and so that non-prod content is not indexed by Google Search (the robots meta tag is another way to prevent indexing).

The easiest approach is a generic user name/password shared across all websites, so that only users who know those credentials can access the sites (if you need more security, go with site-specific or individual users).

In AEM, basic authentication can be enabled quickly through the Dispatcher (Apache).

Create a common configuration file for authentication - /conf.d/htaccess/authentication.conf

## unsets authorization header when sending request to AEM

RequestHeader unset Authorization

AuthType Basic

AuthBasicProvider file

AuthUserFile /etc/httpd/conf.d/htaccess/credential.htpasswd

AuthName "Authentication Required"

Require valid-user

Include this file in the individual Virtual Hosts

<Location />

<If "'${ENV_TYPE}' =~ m#(dev|uat|stage)#">

Include /etc/httpd/conf.d/htaccess/authentication.conf

</If>

</Location>

ENV_TYPE can be set as an environment variable, e.g. in /etc/sysconfig/httpd (in AMS environments the required environment variables are set by default)

ENV_TYPE='dev'
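To sanity-check which ENV_TYPE values would switch authentication on, the condition from the `<If>` block can be mirrored in a few lines of shell. This is only a sketch: the function name `auth_enabled_for` is made up for illustration, and the check runs outside Apache. Like the Apache expression, the match is unanchored, so any value that merely contains `dev`, `uat` or `stage` also matches.

```shell
#!/bin/sh
# Hypothetical helper mirroring the Apache condition
#   '${ENV_TYPE}' =~ m#(dev|uat|stage)#
# Returns 0 when basic authentication would be enabled for the given value.
# The patterns are substring matches, like the unanchored regex.
auth_enabled_for() {
    case "$1" in
        *dev*|*uat*|*stage*) return 0 ;;
        *) return 1 ;;
    esac
}

# Print the outcome for a few sample environment types.
for env_type in dev uat stage prod; do
    if auth_enabled_for "$env_type"; then
        echo "$env_type: basic authentication enabled"
    else
        echo "$env_type: no basic authentication"
    fi
done
```

If only exact values should match, the Apache expression can be anchored as `m#^(dev|uat|stage)$#`.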

Create the credential file by executing the command below; you will be prompted to enter the password

htpasswd -c /etc/httpd/conf.d/htaccess/credential.htpasswd testuser

Execute the command below to add additional users if required

htpasswd /etc/httpd/conf.d/htaccess/credential.htpasswd testuser1
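Note that the first command uses `-c`, which creates the file and would overwrite an existing one, while the second omits it. If you want to script this step, a small wrapper can make that choice automatically. The sketch below is an assumption on my side, not part of the original setup: `add_basic_auth_user` and the `HTPASSWD_BIN` override are hypothetical names, and it relies on the `-i` option of `htpasswd` (Apache 2.4) to read the password from standard input instead of prompting.

```shell
#!/bin/sh
# Hypothetical wrapper around htpasswd for scripted use.
# HTPASSWD_BIN is an illustrative override, handy for dry runs.
# The password is read from stdin (-i), not passed as an argument.
add_basic_auth_user() {
    file="$1"
    user="$2"
    if [ -z "$file" ] || [ -z "$user" ]; then
        echo "usage: add_basic_auth_user FILE USER < password" >&2
        return 2
    fi
    if [ -e "$file" ]; then
        # File exists: add or update the user and keep the other entries.
        "${HTPASSWD_BIN:-htpasswd}" -i "$file" "$user"
    else
        # First user: -c creates the credential file.
        "${HTPASSWD_BIN:-htpasswd}" -c -i "$file" "$user"
    fi
}

# Usage (TESTUSER_PASSWORD is a placeholder variable):
# printf '%s\n' "$TESTUSER_PASSWORD" | \
#     add_basic_auth_user /etc/httpd/conf.d/htaccess/credential.htpasswd testuser
```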

Restart the Apache server; basic authentication is now enabled for the websites.
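A quick way to confirm the setup is to compare the HTTP status of a request without credentials against one with credentials. The following is a minimal sketch, assuming `curl` is available; the host `dev.example.com` and the function names are placeholders, not values from this setup. I only check that the anonymous request gets 401 and the authenticated one does not, since the exact success status depends on the page requested.

```shell
#!/bin/sh
# Hypothetical host; replace with one of your non-prod domains.
SITE_URL="${SITE_URL:-https://dev.example.com/}"

# Print only the HTTP status code; all arguments are passed to curl.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' "$@"
}

# Succeeds when the two status codes look like working basic auth:
# 401 without credentials and anything other than 401 with them.
basic_auth_ok() {
    anon_status="$1"
    auth_status="$2"
    [ "$anon_status" = "401" ] && [ "$auth_status" != "401" ]
}

# Usage (needs network access to the site; password is a placeholder):
# anon=$(http_status "$SITE_URL")
# auth=$(http_status -u 'testuser:yourpassword' "$SITE_URL")
# basic_auth_ok "$anon" "$auth" && echo "basic authentication is active"
```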

Authenticated Performance Testing with Cloud Manager:

https://www.albinsblog.com/2020/11/beginners-tutorial-what-is-cloud-manager-in-aem.html

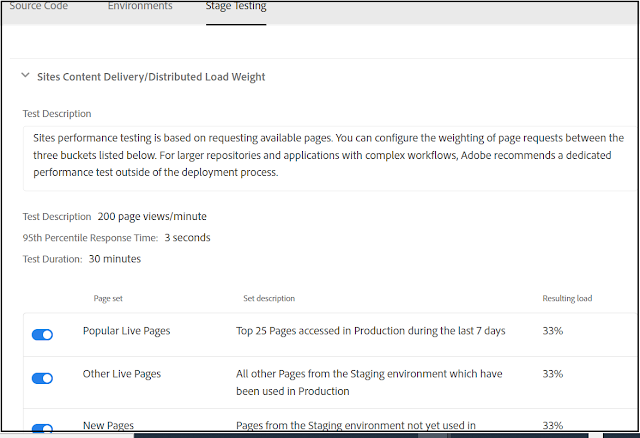

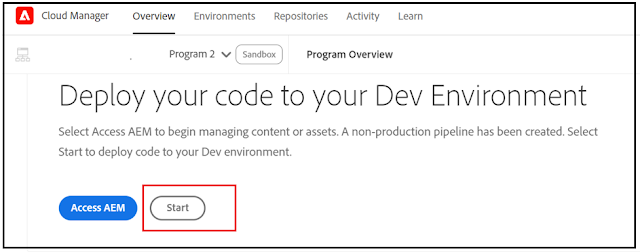

Cloud Manager runs performance testing on the Stage environment after every successful deployment.

The list of Stage domains enabled for performance testing can be configured - this configuration is not supported through the UI and needs to be enabled through the backend (the Adobe team should be able to help here)

Also, the required performance KPIs can be configured through the Cloud Manager UI

Refer to the following URL for more details on performance testing - https://experienceleague.adobe.com/docs/experience-manager-cloud-manager/using/how-to-use/understand-your-test-results.html?lang=en#performance-testing

The performance testing will now fail because basic authentication is enabled for the Stage websites. To overcome this, the basic authentication credentials should be configured in the Cloud Manager prod pipeline - this configuration can't be done through the Cloud Manager UI but only through the Cloud Manager Plugin for the Adobe I/O CLI

This CLI supports two modes of authentication: Browser-based and Service Account. I am going to use browser-based authentication to enable the required configurations

As a prerequisite, ensure Node.js and NPM are installed on your machine; refer to the following URL for more details - https://www.npmjs.com/package/@adobe/aio-cli-plugin-cloudmanager

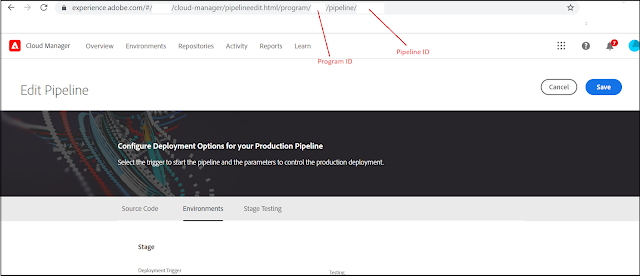

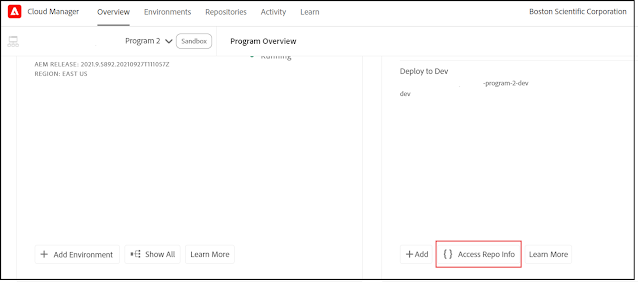

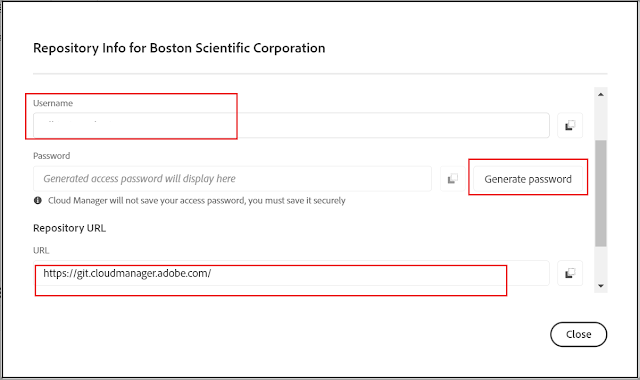

Identify the program ID and the production pipeline ID by logging in to the Cloud Manager UI

Execute the commands below on your machine (ensure you have the required permissions)

npm install -g @adobe/aio-cli

aio plugins:install @adobe/aio-cli-plugin-cloudmanager

aio auth:login - login through browser with your Cloud Manager credentials

aio cloudmanager:org:select - select the organization where your program and pipeline are configured (in most cases there is a single org)

aio config:set cloudmanager_programid PROGRAMID - Add your program ID

aio cloudmanager:set-pipeline-variables PIPELINEID --variable CM_PERF_TEST_BASIC_USERNAME testuser --secret CM_PERF_TEST_BASIC_PASSWORD pwdtestuser - Add your pipeline ID and the basic credentials
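If you repeat this step for several pipelines, the last command can be wrapped in a small function so the pipeline ID and credentials are passed in as arguments. This is just a sketch under my own naming: `set_perf_test_credentials` and the `AIO_BIN` override are hypothetical, while the `aio` command and the two variable names are the ones used above.

```shell
#!/bin/sh
# Hypothetical wrapper around the set-pipeline-variables command above.
# AIO_BIN is an illustrative override, handy for dry runs.
set_perf_test_credentials() {
    pipeline_id="$1"
    username="$2"
    password="$3"
    if [ -z "$pipeline_id" ] || [ -z "$username" ] || [ -z "$password" ]; then
        echo "usage: set_perf_test_credentials PIPELINEID USERNAME PASSWORD" >&2
        return 2
    fi
    # The user name is stored as a plain variable, the password as a secret.
    "${AIO_BIN:-aio}" cloudmanager:set-pipeline-variables "$pipeline_id" \
        --variable CM_PERF_TEST_BASIC_USERNAME "$username" \
        --secret CM_PERF_TEST_BASIC_PASSWORD "$password"
}

# Usage, with placeholder environment variables holding the real values:
# set_perf_test_credentials "$CM_PIPELINE_ID" "$BASIC_AUTH_USER" "$BASIC_AUTH_PASSWORD"
```

Reading the values from environment variables, as in the usage line, keeps the actual password out of the script itself.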

Basic authentication is now enabled for the websites, and the basic authentication credentials are supplied by Cloud Manager when it runs the performance testing.