How to enable search synonyms in AEM with Lucene?

This tutorial explains how to enable search synonyms in AEM with Lucene.

Search Synonyms

Synonyms tell the search engine that a search for one word should also match related words. For example, a search for "gigabyte" should also consider "gigabytes", "gib", and "gb".

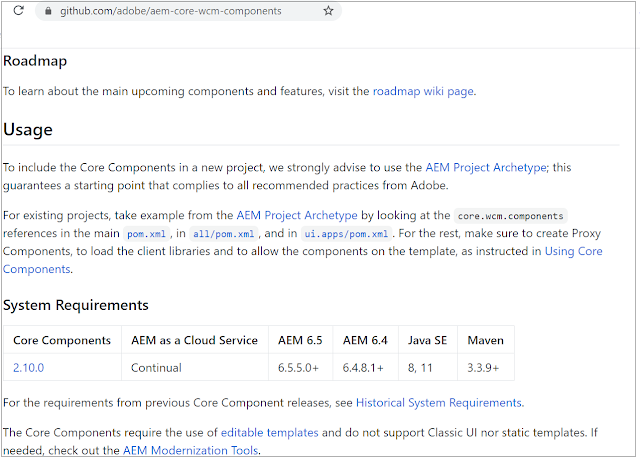

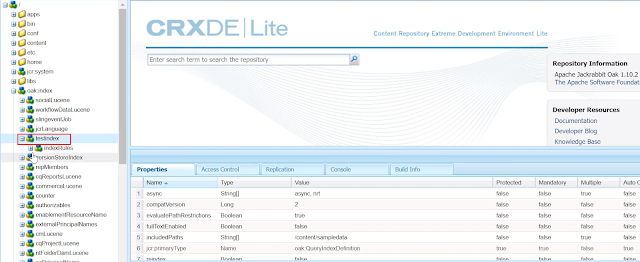

An analyzer must be configured on the custom Oak index to support search synonyms.

Refer to the following tutorials to configure a custom Oak index and its analyzers:

https://www.albinsblog.com/2020/04/oak-lucene-index-improve-query-in-aem-configure-lucene-index.html#.Xu7oD2hKjb1

https://www.albinsblog.com/2020/05/how-to-enable-case-insensitive-search-in-aem-lucene.html#.Xu7nAWhKjb1

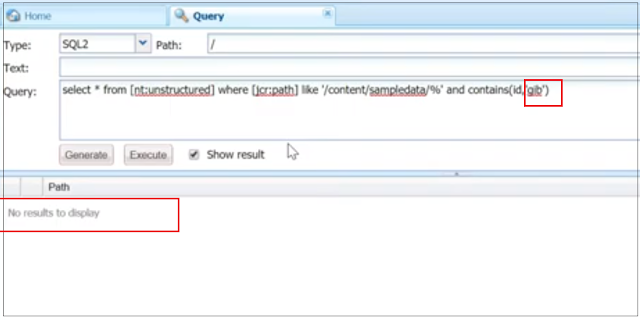

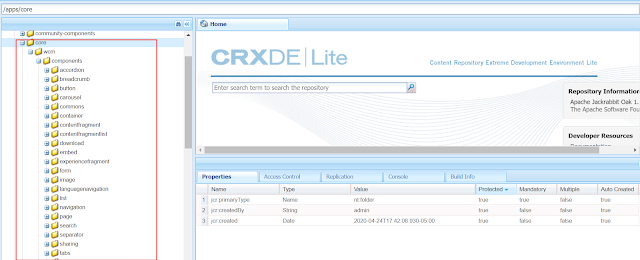

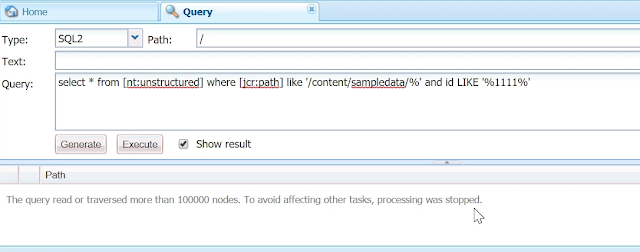

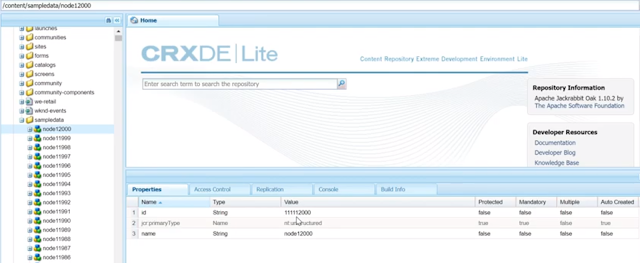

I have a data node whose id property has the value "gigabyte". The node is returned when searching for "gigabyte", but not when searching for "gigabytes", "gib", or "gb".


Configure Analyzer

Let us now configure the analyzer to support search synonyms.

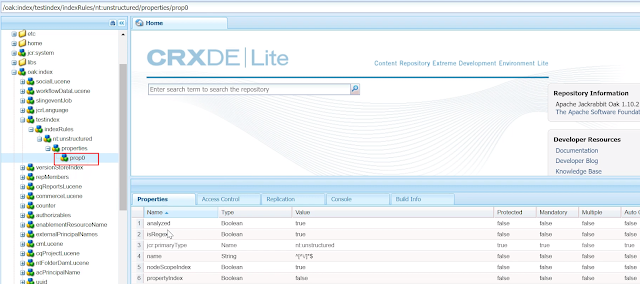

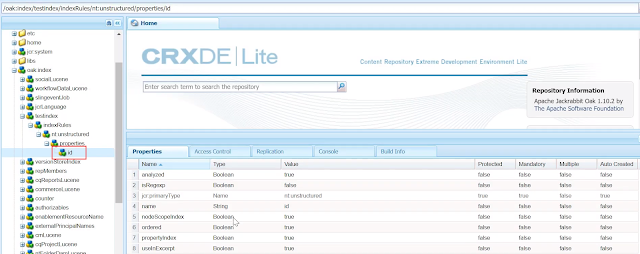

Create a node named "Synonym" with the primary type "nt:unstructured" under analyzers/default/filters (refer to the sample configuration package at the Git link at the bottom of this tutorial).

Add the following properties to the Synonym node:

- format: solr or wordnet

- synonyms: synonyms.txt, the file with the synonym definitions

There are two possible formats for the dictionary; I am using the solr format for this demo.

- wordnet: the format used by the WordNet lexical database. The synonyms must be defined in that specific format

- solr: plain text, with comma-separated values
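As a rough sketch, the node and properties described above would sit in the index definition roughly as follows. The index name `<custom-index>` is a placeholder, not a name from this tutorial, and storing synonyms.txt as an nt:file child of the filter node follows the usual Oak convention for analyzer resource files; check the sample package linked at the bottom for the exact structure used in the demo.

```text
/oak:index/<custom-index>              (placeholder for your custom index)
  + analyzers
    + default
      + filters                        (nt:unstructured)
        + Synonym                      (nt:unstructured)
          - format = "solr"
          - synonyms = "synonyms.txt"
          + synonyms.txt               (nt:file, assumed location of the file)
```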

In the solr format, synonyms.txt is a simple comma-separated list of synonyms. All terms that should match each other must be on the same row; searching for any word in a row matches every other word in that row. A common use of synonyms is matching variations of a word.

synonyms.txt

GB,gib,gigabyte,gigabytes

MB,mib,megabyte,megabytes

Television, Televisions, TV, TVs
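To make the row-based matching concrete, here is a small Python sketch that mimics how comma-separated rows can be read as groups of equivalent terms. It is only an illustration and not the Lucene implementation; the function names `parse_synonyms` and `expand` are made up for this example, and lower-casing the terms is an assumption (in AEM, case handling depends on the other filters configured on the analyzer).

```python
def parse_synonyms(text):
    """Map every term to the full set of terms that share its row."""
    groups = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        # Assumption: terms are trimmed and compared case-insensitively.
        terms = {t.strip().lower() for t in line.split(",") if t.strip()}
        for term in terms:
            groups.setdefault(term, set()).update(terms)
    return groups


def expand(term, groups):
    """Return the sorted list of terms a search for `term` should consider."""
    key = term.strip().lower()
    return sorted(groups.get(key, {key}))


SYNONYMS_TXT = """\
GB,gib,gigabyte,gigabytes
MB,mib,megabyte,megabytes
Television, Televisions, TV, TVs
"""

groups = parse_synonyms(SYNONYMS_TXT)
print(expand("gib", groups))  # ['gb', 'gib', 'gigabyte', 'gigabytes']
```

A term that does not appear in any row, such as "kilobyte", simply expands to itself.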

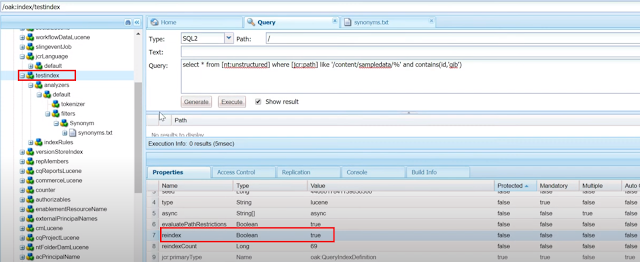

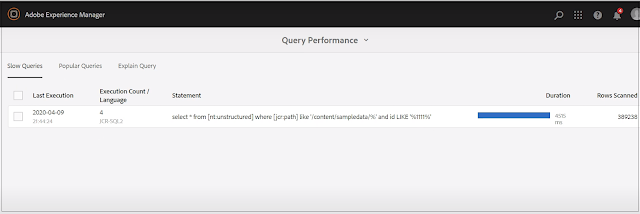

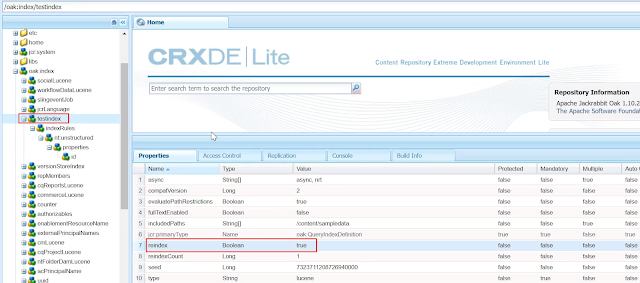

The configuration is now ready, so let us re-index the data. Set the reindex property on the custom index to true to start re-indexing; the property value changes back to false once re-indexing has been initiated.

Wait a few minutes for the indexing to complete.
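The reindex flag can be set in CRXDE as shown in the screenshots. If you prefer to script it, one possible approach is a Sling POST request that sets the property. The sketch below only builds such a request and does not send it; the host and the index path `/oak:index/sampleIndex` are hypothetical, and a real call would also need authentication for a user allowed to modify the index definition.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_reindex_request(base_url, index_path):
    """Build (but do not send) a Sling POST that sets reindex=true."""
    body = urlencode({
        "reindex": "true",
        "reindex@TypeHint": "Boolean",  # ask Sling to store a Boolean, not a String
    }).encode("utf-8")
    return Request(base_url.rstrip("/") + index_path, data=body, method="POST")


# Hypothetical host and index name, for illustration only.
req = build_reindex_request("http://localhost:4502/", "/oak:index/sampleIndex")
print(req.get_method(), req.full_url)
```

Sending the request (for example with `urllib.request.urlopen` plus credentials) is left out on purpose, so the snippet can be run safely without an AEM instance.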

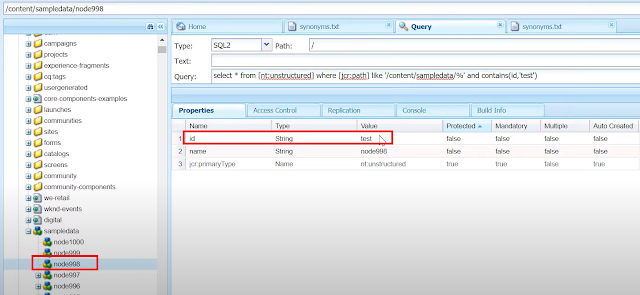

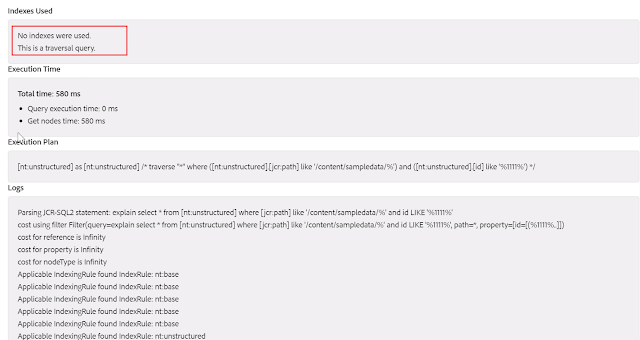

Let us now search for "gib". No node under /content/sampledata has "gib" as its id property value, but "gib" is configured as a synonym for "gigabyte", so the node whose id property value is "gigabyte" is returned as a result.

Synonyms Configuration - https://github.com/techforum-repo/youttubedata/tree/master/lucene